# Description

Makes IR the default evaluator, in preparation for removing the non-IR evaluator in a future release.

# User-Facing Changes

* Remove `NU_USE_IR` option

* Add `NU_DISABLE_IR` option

* IR is enabled unless `NU_DISABLE_IR` is set

# After Submitting

- [ ] release notes

# Description

The word *usage* specifically describes how a command or function is *used*; it is not a synonym for a general description. Usage can describe the SYNOPSIS or EXAMPLES sections of a man page, where the permitted argument combinations are shown or example *uses* are given.

Let's not confuse people, and call it what it is: a description.

Our `help` command already creates its own *Usage* section based on the available arguments and doesn't refer to the description as usage.

# User-Facing Changes

`help commands` and `scope commands` will now use the column names `description` and `extra_description`:

- `usage` -> `description`
- `extra_usage` -> `extra_description`

Breaking change in the plugin protocol: in the signature record communicated with the engine,

- `usage` -> `description`
- `extra_usage` -> `extra_description`

The same rename also applies to the methods on `SimplePluginCommand` and `PluginCommand`.

# Tests + Formatting

- Updated plugin protocol specific changes

# After Submitting

- [ ] update plugin protocol doc

# Description

Allows `Stack` to have a modified local `Config`, which is updated immediately when `$env.config` is assigned to. This means that even within a script, commands that come after `$env.config` changes will always see those changes in `Stack::get_config()`.

Also fixed a lot of cases where `engine_state.get_config()` was used even when `Stack` was available.

Closes #13324.
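A minimal sketch of the lookup order described above, assuming a simplified model in which `Stack` holds an optional `Arc<Config>` that shadows the engine-wide config as soon as `$env.config` is assigned. `Config`, `EngineState`, `Stack`, and the `show_banner` field here are hypothetical stand-ins, not Nushell's actual definitions.

```rust
use std::sync::Arc;

// Hypothetical, heavily simplified stand-ins for Nushell's types.
#[derive(Clone, Debug, PartialEq)]
pub struct Config {
    pub show_banner: bool,
}

pub struct EngineState {
    config: Arc<Config>,
}

impl EngineState {
    pub fn new(config: Config) -> Self {
        EngineState { config: Arc::new(config) }
    }

    pub fn get_config(&self) -> &Arc<Config> {
        &self.config
    }
}

#[derive(Default)]
pub struct Stack {
    // Set as soon as `$env.config` is assigned within this stack.
    config: Option<Arc<Config>>,
}

impl Stack {
    // Called right after an assignment to `$env.config`, so the new
    // value is parsed once and visible to every later command.
    pub fn update_config(&mut self, config: Config) {
        self.config = Some(Arc::new(config));
    }

    // Prefer the stack-local config; fall back to the engine-wide one.
    pub fn get_config(&self, engine_state: &EngineState) -> Arc<Config> {
        self.config
            .clone()
            .unwrap_or_else(|| engine_state.get_config().clone())
    }
}
```

Cloning an `Arc` only bumps a reference count, so handing the config to each command stays cheap.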

# User-Facing Changes

- Config changes apply immediately after the assignment is executed, rather than whenever config is read by a command that needs it.

- Potentially slower performance when executing a lot of lines that change `$env.config` one after another. It is recommended to copy `$env.config` into a `mut` variable first, make the modifications, and then assign it back.

- Much faster performance when executing a script that made modifications to `$env.config`, as the changes are only parsed once.

# Tests + Formatting

All passing.

# After Submitting

- [ ] release notes

# Description

This PR introduces a new `Signals` struct to replace our ad hoc passing around of `ctrlc: Option<Arc<AtomicBool>>`. Doing so has a few benefits:

- We can better enforce when/where resetting or triggering an interrupt is allowed.

- Consolidates `nu_utils::ctrl_c::was_pressed` and other ad hoc re-implementations into a single place: `Signals::check`.

- This allows us to add other types of signals later if we want, e.g., exiting or suspension.

- Similarly, we can more easily change the underlying implementation if we need to in the future.

- Places that used to have a `ctrlc` of `None` now use `Signals::empty()`, so we can double-check these usages for correctness in the future.
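A simplified sketch of the wrapper idea, assuming `Signals` keeps the same optional `Arc<AtomicBool>` internally. The unit `Interrupted` error stands in for whatever error type the real `check` reports, and `reset` is included only to illustrate how resets can be funneled through one type.

```rust
use std::sync::atomic::{AtomicBool, Ordering};
use std::sync::Arc;

/// Hypothetical simplified `Signals`: wraps the interrupt flag instead of
/// passing `Option<Arc<AtomicBool>>` around directly.
#[derive(Clone, Debug, Default)]
pub struct Signals {
    interrupt: Option<Arc<AtomicBool>>,
}

/// Stand-in error type for an interrupted operation.
#[derive(Debug, PartialEq)]
pub struct Interrupted;

impl Signals {
    pub fn new(interrupt: Arc<AtomicBool>) -> Self {
        Signals { interrupt: Some(interrupt) }
    }

    /// Replaces the old `ctrlc: None`; never reports an interrupt.
    pub fn empty() -> Self {
        Signals { interrupt: None }
    }

    /// The single place that checks whether an interrupt was triggered.
    pub fn check(&self) -> Result<(), Interrupted> {
        match &self.interrupt {
            Some(flag) if flag.load(Ordering::Relaxed) => Err(Interrupted),
            _ => Ok(()),
        }
    }

    /// Resetting goes through this type, which keeps resets easy to audit.
    pub fn reset(&self) {
        if let Some(flag) = &self.interrupt {
            flag.store(false, Ordering::Relaxed);
        }
    }
}
```

Long-running loops would call `signals.check()?` once per item instead of reaching into the atomic themselves.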

# Description

Provides the ability to use http commands as part of a pipeline.

Additionally, this pull request extends the pipeline metadata with a `content_type` field. The `content_type` metadata field allows commands such as `to json` to set the metadata in the pipeline, allowing the http commands to use it when making requests.

This pull request also introduces the ability to stream http requests directly from streaming pipelines.

One other small change is that Content-Type will always be set if it is passed in to the http commands, either indirectly or through the content type flag. Previously it was not preserved with requests that were not of type json or form data.

# User-Facing Changes

* `http post`, `http put`, `http patch`, `http delete` can be used as part of a pipeline

* `to text`, `to json`, `from json` all set the `content_type` metadata field, and the http commands will use it when making requests.

# Description

This PR allows byte streams to optionally be colored as being specifically binary or string data, which guarantees that they'll be converted to `Binary` or `String` appropriately on `into_value()`, making them compatible with `Type` guarantees. This makes them significantly more broadly usable for command input and output.

There is still an `Unknown` type for byte streams coming from external commands, which uses the same behavior as before: it's a string if it's UTF-8.

A small number of commands were updated to take advantage of this, just to prove the point. I will be adding more after this merges.
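A sketch of how a stream's "color" could drive `into_value()`, assuming the three cases described above and assuming that invalid UTF-8 in a stream explicitly typed as `String` is treated as an error. `ByteStreamType`, `Value`, and `NotUtf8` are simplified hypothetical types.

```rust
/// Hypothetical sketch of coloring a byte stream with the kind of data it carries.
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum ByteStreamType {
    Binary,
    String,
    Unknown,
}

#[derive(Debug, PartialEq)]
pub enum Value {
    Binary(Vec<u8>),
    String(String),
}

#[derive(Debug, PartialEq)]
pub struct NotUtf8;

/// Convert the collected bytes of a stream according to its type.
pub fn into_value(bytes: Vec<u8>, ty: ByteStreamType) -> Result<Value, NotUtf8> {
    match ty {
        ByteStreamType::Binary => Ok(Value::Binary(bytes)),
        // A `String` stream promises a string, so invalid UTF-8 is an error.
        ByteStreamType::String => String::from_utf8(bytes)
            .map(Value::String)
            .map_err(|_| NotUtf8),
        // Previous behavior (external commands): a string if it happens to be UTF-8.
        ByteStreamType::Unknown => match String::from_utf8(bytes) {
            Ok(s) => Ok(Value::String(s)),
            Err(err) => Ok(Value::Binary(err.into_bytes())),
        },
    }
}
```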

# User-Facing Changes

- New types in `describe`: `string (stream)`, `binary (stream)`
- These commands now return a stream if their input was a stream:
  - `into binary`
  - `into string`
  - `bytes collect`
  - `str join`
  - `first` (binary)
  - `last` (binary)
  - `take` (binary)
  - `skip` (binary)
- Streams that are explicitly binary colored will print as a streaming hexdump
  - example:

    ```nushell
    1.. | each { into binary } | bytes collect
    ```

# Tests + Formatting

I've added some tests to cover it at a basic level, and it doesn't break anything existing, but I do think more would be nice. Some of those will come when I modify more commands to stream.

# After Submitting

There are a few things I'm not quite satisfied with:

- **String trimming behavior.** We automatically trim newlines from streams from external commands, but I don't think we should do this with internal commands. If I call a command that happens to turn my string into a stream, I don't want the newline to suddenly disappear. I changed this to specifically do it only on `Child` and `File`, but I don't know if this is quite right, and maybe we should bring back the old flag for `trim_end_newline`.

- **Known binary always resulting in a hexdump.** It would be nice to have a `print --raw`, so that we can put binary data on stdout explicitly if we want to. This PR doesn't change how external commands work though: they still dump straight to stdout.

Otherwise, here's the normal checklist:

- [ ] release notes

- [ ] docs update for plugin protocol changes (added `type` field)

---------

Co-authored-by: Ian Manske <ian.manske@pm.me>

# Description

This PR introduces a `ByteStream` type which is a `Read`-able stream of bytes. Internally, it has an enum over three different byte stream sources:

```rust
use std::fs::File;
use std::io::Read;

// `ChildProcess` is Nushell's own wrapper around a spawned external process.
pub enum ByteStreamSource {
    Read(Box<dyn Read + Send + 'static>),
    File(File),
    Child(ChildProcess),
}
```

This is in comparison to the current `RawStream` type, which is an `Iterator<Item = Vec<u8>>` and has to allocate for each read chunk.

Currently, `PipelineData::ExternalStream` serves a weird dual role where it is either external command output or a wrapper around `RawStream`. `ByteStream` makes this distinction clearer (via `ByteStreamSource`) and replaces `PipelineData::ExternalStream` in this PR:

```rust
pub enum PipelineData {
    Empty,
    Value(Value, Option<PipelineMetadata>),
    ListStream(ListStream, Option<PipelineMetadata>),
    ByteStream(ByteStream, Option<PipelineMetadata>),
}
```

The PR is relatively large, but a decent amount of it is just repetitive changes.

This PR fixes #7017, fixes #10763, and fixes #12369.

This PR also improves performance when piping external commands. Nushell should, in most cases, have competitive pipeline throughput compared to, e.g., bash.

| Command | Before (MB/s) | After (MB/s) | Bash (MB/s) |
| -------------------------------------------------- | -------------:| ------------:| -----------:|
| `throughput \| rg 'x'` | 3059 | 3744 | 3739 |
| `throughput \| nu --testbin relay o> /dev/null` | 3508 | 8087 | 8136 |

# User-Facing Changes

- This is a breaking change for the plugin communication protocol, because the `ExternalStreamInfo` was replaced with `ByteStreamInfo`. Plugins now only have to deal with a single input stream, as opposed to the previous three streams: stdout, stderr, and exit code.

- The output of `describe` has been changed for external/byte streams.

- Temporary breaking change: `bytes starts-with` no longer works with byte streams. This is to keep the PR smaller, and `bytes ends-with` already does not work on byte streams.

- If a process core dumped, then instead of having a `Value::Error` in the `exit_code` column of the output returned from `complete`, it now is a `Value::Int` with the negation of the signal number.
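A small sketch of the new `exit_code` rule, written as a hypothetical pure helper so it runs anywhere. On Unix, the two inputs would come from `std::process::ExitStatus::code()` (which is `None` when the process was killed by a signal) and `std::os::unix::process::ExitStatusExt::signal()`.

```rust
/// Hypothetical helper: a normal exit yields its exit code, while a process
/// terminated by a signal yields the negated signal number.
pub fn exit_code_value(code: Option<i32>, signal: Option<i32>) -> Option<i64> {
    match (code, signal) {
        (Some(code), _) => Some(i64::from(code)),
        (None, Some(sig)) => Some(-i64::from(sig)),
        (None, None) => None,
    }
}
```

For example, a process killed by `SIGSEGV` (signal 11 on Linux) would report `-11`.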

# After Submitting

- Update docs and book as necessary

- Release notes (e.g., plugin protocol changes)

- Adapt/convert commands to work with byte streams (high priority is `str length`, `bytes starts-with`, and maybe `bytes ends-with`).

- Refactor the `tee` code; Devyn has already done some work on this.

---------

Co-authored-by: Devyn Cairns <devyn.cairns@gmail.com>

# Description

Makes some miscellaneous changes to `ListStream`:

- Moves it into its own module/file separate from `RawStream`.
- `ListStream`s now have an associated `Span`.
  - This required changes to `ListStreamInfo` in `nu-plugin`. Not sure if this is a breaking change for the plugin protocol.
- Hides the internals of `ListStream` but also adds a few more methods.
  - This includes two functions to more easily alter a stream (these take a `ListStream` and return a `ListStream` instead of having to go through the whole `into_pipeline_data(..)` route).
    - `map`: takes a `FnMut(Value) -> Value`
    - `modify`: takes a function to modify the inner stream.
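A sketch of what `map` and `modify` buy us, assuming a simplified `ListStream` that is just a boxed iterator plus a span. Here `i64` stands in for Nushell's `Value` and `(usize, usize)` for `Span`; the exact signatures in the PR may differ.

```rust
type Inner = Box<dyn Iterator<Item = i64> + Send + 'static>;

/// Hypothetical simplified `ListStream`: internals are private.
pub struct ListStream {
    stream: Inner,
    span: (usize, usize),
}

impl ListStream {
    pub fn new(iter: impl Iterator<Item = i64> + Send + 'static, span: (usize, usize)) -> Self {
        ListStream { stream: Box::new(iter), span }
    }

    pub fn span(&self) -> (usize, usize) {
        self.span
    }

    /// Apply a function to every item, keeping the span.
    pub fn map(self, f: impl FnMut(i64) -> i64 + Send + 'static) -> Self {
        ListStream { stream: Box::new(self.stream.map(f)), span: self.span }
    }

    /// Replace the inner iterator with an arbitrary transformation of it.
    pub fn modify<I>(self, f: impl FnOnce(Inner) -> I) -> Self
    where
        I: Iterator<Item = i64> + Send + 'static,
    {
        ListStream { stream: Box::new(f(self.stream)), span: self.span }
    }
}

impl IntoIterator for ListStream {
    type Item = i64;
    type IntoIter = Inner;

    fn into_iter(self) -> Inner {
        self.stream
    }
}
```

Both methods stay lazy: nothing is pulled from the source until the stream is consumed.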

# Description

Removes lazy records from the language, following from the reasons outlined in #12622. Namely, this should make semantics more clear and will eliminate concerns regarding maintainability.

# User-Facing Changes

- Breaking change: `lazy make` is removed.

- Breaking change: `describe --collect-lazyrecords` flag is removed.

- `sys` and `debug info` now return regular records.

# After Submitting

- Update nushell book if necessary.

- Explore new `sys` and `debug info` APIs to prevent them from taking too long (e.g., subcommands or taking an optional column/cell-path argument).

# Description

`Value` describes the types of first-class values that users and scripts can create, manipulate, pass around, and store. However, `Block`s are not first-class values in the language, so this PR removes it from `Value`. This removes some unnecessary code, and this change should be invisible to the user except for the change to `scope modules` described below.

# User-Facing Changes

Breaking change: the output of `scope modules` was changed so that `env_block` is now `has_env_block`, which is a boolean value instead of a `Block`.

# After Submitting

Possibly update the language guide.

# Description

This adds a `SharedCow` type as a transparent copy-on-write pointer that clones to unique on mutate.

As an initial test, the `Record` within `Value::Record` is shared.

There are some pretty big wins for performance. I'll post benchmark results in a comment. The biggest winner is nested access, as that would have cloned the records for each cell path step before, and it doesn't have to anymore.

The reusability of the `SharedCow` type is nice, and I think it could be used to clean up the previous work I did with `Arc` in `EngineState`. It's meant to be a mostly transparent clone-on-write that only clones on `.to_mut()` or `.into_owned()` if there are actually multiple references, but avoids cloning if the reference is unique.
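A minimal sketch of such a pointer built on `Arc`, assuming the behavior described above: `Arc::make_mut` clones only when the value is shared, and `Arc::try_unwrap` avoids a clone when the reference is unique. This illustrates the technique and is not necessarily how the PR implements `SharedCow`.

```rust
use std::ops::Deref;
use std::sync::Arc;

/// Hypothetical copy-on-write pointer: cloning is a reference-count bump,
/// and the inner value is deep-cloned only when mutated while shared.
#[derive(Debug)]
pub struct SharedCow<T: Clone>(Arc<T>);

impl<T: Clone> Clone for SharedCow<T> {
    fn clone(&self) -> Self {
        SharedCow(Arc::clone(&self.0))
    }
}

impl<T: Clone> SharedCow<T> {
    pub fn new(value: T) -> Self {
        SharedCow(Arc::new(value))
    }

    /// Mutable access; clones the inner value only if other references exist.
    pub fn to_mut(&mut self) -> &mut T {
        Arc::make_mut(&mut self.0)
    }

    /// Take ownership; clones only if other references exist.
    pub fn into_owned(self) -> T {
        Arc::try_unwrap(self.0).unwrap_or_else(|shared| (*shared).clone())
    }
}

impl<T: Clone> Deref for SharedCow<T> {
    type Target = T;

    fn deref(&self) -> &T {
        &self.0
    }
}
```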

# User-Facing Changes

- `Value::Record` field is a different type (plugin authors)

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

- [ ] use for `EngineState`

- [ ] use for `Value::List`

# Description

Currently, `Range` is a struct with a `from`, `to`, and `incr` field, which are all of type `Value`. This PR changes `Range` to be an enum over `IntRange` and `FloatRange` for better type safety / stronger compile-time guarantees.

Fixes: #11778, fixes: #11777, fixes: #11776, fixes: #11775, fixes: #11774, fixes: #11773, fixes: #11769.
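A sketch of why splitting the range type helps, assuming hypothetical `IntRange`/`FloatRange` structs with a start, step, and inclusive end. Because each variant has concrete field types, the compiler rules out mixing an integer start with a float end, and constructors can reject invalid steps up front.

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct IntRange {
    start: i64,
    step: i64,
    end: i64, // inclusive, as in `1..3`
}

#[derive(Debug, Clone, Copy, PartialEq)]
pub struct FloatRange {
    start: f64,
    step: f64,
    end: f64,
}

/// Hypothetical enum over the two concrete range kinds.
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Range {
    IntRange(IntRange),
    FloatRange(FloatRange),
}

impl IntRange {
    /// Returns `None` for a zero step, which would never terminate.
    pub fn new(start: i64, step: i64, end: i64) -> Option<Self> {
        (step != 0).then_some(IntRange { start, step, end })
    }

    pub fn iter(&self) -> impl Iterator<Item = i64> {
        let IntRange { start, step, end } = *self;
        // `checked_add` stops the iteration instead of overflowing.
        std::iter::successors(Some(start), move |&n| n.checked_add(step))
            .take_while(move |&n| if step > 0 { n <= end } else { n >= end })
    }
}
```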

# User-Facing Changes

Hopefully none, besides bug fixes.

Although, the `serde` representation might have changed.

# Description

The second `Value` is redundant and will consume five extra bytes on each transmission of a custom value to/from a plugin.

# User-Facing Changes

This is a breaking change to the plugin protocol.

The [example in the protocol reference](https://www.nushell.sh/contributor-book/plugin_protocol_reference.html#value) becomes

```json
{
  "Custom": {
    "val": {
      "type": "PluginCustomValue",
      "name": "database",
      "data": [36, 190, 127, 40, 12, 3, 46, 83],
      "notify_on_drop": true
    },
    "span": {
      "start": 320,
      "end": 340
    }
  }
}
```

instead of

```json
{
  "CustomValue": {
    ...
  }
}
```

# After Submitting

Update plugin protocol reference

# Description

When implementing a `Command`, one must also import all the types present in the function signatures for `Command`. This makes it so that we often import the same set of types in each command implementation file. E.g., something like this:

```rust
use nu_protocol::ast::Call;
use nu_protocol::engine::{Command, EngineState, Stack};
use nu_protocol::{
    record, Category, Example, IntoInterruptiblePipelineData, IntoPipelineData, PipelineData,
    ShellError, Signature, Span, Type, Value,
};
```

This PR adds the `nu_engine::command_prelude` module which contains the necessary and commonly used types to implement a `Command`:

```rust
// command_prelude.rs
pub use crate::CallExt;
pub use nu_protocol::{
    ast::{Call, CellPath},
    engine::{Command, EngineState, Stack},
    record, Category, Example, IntoInterruptiblePipelineData, IntoPipelineData, IntoSpanned,
    PipelineData, Record, ShellError, Signature, Span, Spanned, SyntaxShape, Type, Value,
};
```

This should reduce the boilerplate needed to implement a command and also gives us a place to track the breadth of the `Command` API. I tried to be conservative with what went into the prelude modules, since it might be hard/annoying to remove items from the prelude in the future. Let me know if something should be included or excluded.

[Context on Discord](https://discord.com/channels/601130461678272522/855947301380947968/1219425984990806207)

# Description

- Rename `CustomValue::value_string()` to `type_name()` to better reflect its usage.

- Change print behavior to always call `to_base_value()` first, to give the custom value better control over the output.

- Change `describe --detailed` to show the type name as the subtype, rather than trying to describe the base value.

- Change custom `Type` to use `type_name()` rather than `typetag_name()` to make things like `PluginCustomValue` more transparent.
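A simplified sketch of the split between the two methods, assuming a hypothetical `CustomValue` trait where `to_base_value` returns a plain `String` (the real method returns a Nushell value and can fail) and a hypothetical `Database` type. Printing goes through `to_base_value()`, while `describe --detailed` reports `type_name()`.

```rust
/// Hypothetical, simplified custom-value trait after the rename.
pub trait CustomValue {
    /// Name shown as the type (formerly `value_string`).
    fn type_name(&self) -> String;
    /// Plain value used for printing.
    fn to_base_value(&self) -> String;
}

/// Hypothetical custom value, loosely modeled on a database handle.
pub struct Database {
    pub path: String,
    pub tables: usize,
}

impl CustomValue for Database {
    fn type_name(&self) -> String {
        "database".to_string()
    }

    fn to_base_value(&self) -> String {
        format!("{} ({} tables)", self.path, self.tables)
    }
}

/// Printing always converts to the base value first.
pub fn render(value: &dyn CustomValue) -> String {
    value.to_base_value()
}

/// The subtype reported by a detailed description is the type name.
pub fn describe_subtype(value: &dyn CustomValue) -> String {
    value.type_name()
}
```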

One question: should `describe --detailed` still include a description of the base value somewhere? I'm torn on it; it seems possibly useful for some things (maybe sqlite databases?), but having `describe -d` not include the custom type name anywhere felt weird. Another option would be to add another method to `CustomValue` for info to be displayed in `describe`, so that it can be more type-specific?

# User-Facing Changes

Everything above has implications for printing and `describe` on custom values.

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# Description

This is a follow-up to https://github.com/nushell/nushell/pull/11621#issuecomment-1937484322

Also fixes: #11838

## About the code change

It applies the same logic as when we pass variables to external commands:

0487e9ffcb/crates/nu-command/src/system/run_external.rs (L162-L170)

That is: if the user passes dynamic input (like variables, subexpressions, or string interpolation), it returns a quoted `NuPath`, so the user input won't be globbed.

# User-Facing Changes

Given two input files: `a*c.txt`, `abc.txt`

* `let f = "a*c.txt"; rm $f` will remove one file: `a*c.txt`.

* ~~`let f = "a*c.txt"; rm --glob $f` will remove `a*c.txt` and `abc.txt`~~

* `let f: glob = "a*c.txt"; rm $f` will remove `a*c.txt` and `abc.txt`

## Rules about globbing with *variable*

Given two files: `a*c.txt`, `abc.txt`

| Cmd Type | example | Result |
| ----- | ------------------ | ------ |
| builtin | `let f = "a*c.txt"; rm $f` | remove `a*c.txt` |
| builtin | `let f: glob = "a*c.txt"; rm $f` | remove `a*c.txt` and `abc.txt` |
| builtin | `let f = "a*c.txt"; rm ($f \| into glob)` | remove `a*c.txt` and `abc.txt` |
| custom | `def crm [f: glob] { rm $f }; let f = "a*c.txt"; crm $f` | remove `a*c.txt` and `abc.txt` |
| custom | `def crm [f: glob] { rm ($f \| into string) }; let f = "a*c.txt"; crm $f` | remove `a*c.txt` |
| custom | `def crm [f: string] { rm $f }; let f = "a*c.txt"; crm $f` | remove `a*c.txt` |
| custom | `def crm [f: string] { rm $f }; let f = "a*c.txt"; crm ($f \| into glob)` | remove `a*c.txt` and `abc.txt` |

In general, if a variable is annotated with the `glob` type, Nushell will expand the glob pattern. Otherwise, we need to use `into glob` to expand the glob pattern.
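A sketch of the rule in the table, assuming a hypothetical `NuPath` with `Quoted`/`UnQuoted` variants (the variant names are illustrative) and a toy matcher that only understands `*`. Quoted or dynamic input is compared literally; only unquoted input is treated as a pattern.

```rust
/// Hypothetical: a path argument remembers whether it may be glob-expanded.
pub enum NuPath {
    Quoted(String),
    UnQuoted(String),
}

/// Toy wildcard matcher supporting only `*` (any run of characters).
fn wildcard_match(pattern: &[char], name: &[char]) -> bool {
    match pattern.split_first() {
        None => name.is_empty(),
        Some((&'*', rest)) => (0..=name.len()).any(|i| wildcard_match(rest, &name[i..])),
        Some((c, rest)) => name.first() == Some(c) && wildcard_match(rest, &name[1..]),
    }
}

/// Return the files an `rm`-style command would target.
pub fn matching<'a>(path: &NuPath, files: &[&'a str]) -> Vec<&'a str> {
    match path {
        // Quoted/dynamic input: treat the text literally.
        NuPath::Quoted(p) => files.iter().copied().filter(|f| *f == p.as_str()).collect(),
        // Unquoted input (e.g. a `glob`-typed variable): expand the pattern.
        NuPath::UnQuoted(p) => {
            let pat: Vec<char> = p.chars().collect();
            files
                .iter()
                .copied()
                .filter(|f| wildcard_match(&pat, &f.chars().collect::<Vec<_>>()))
                .collect()
        }
    }
}
```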

# Tests + Formatting

Done

# After Submitting

I think the `str glob-escape` command will no longer be required. We can remove it.

# Description

Fixes: #11455

### For arguments annotated with `:path/:directory/:glob`

To fix the issue, we need a way to know at runtime whether a path was originally quoted. So the information needs to be added at several levels:

* parse time (from user input to expression)

  We need to add the quoted information into `Expr::Filepath`, `Expr::Directory`, and `Expr::GlobPattern`.

* eval time

  When converting `Expr::Filepath`, `Expr::Directory`, or `Expr::GlobPattern` to `Value::String` at runtime, we won't auto-expand the path if it's quoted.

### For `ls`

It's really special, because it accepts a `String` as a pattern, and it generates a `glob` expression inside the command itself.

So the idea behind the change is to introduce a special SyntaxShape for `ls`: `SyntaxShape::LsGlobPattern`. This makes it easier to track whether the pattern was originally quoted, and we don't auto-expand the path either.

Then, when constructing a glob pattern inside `ls`, we check whether the input pattern is quoted; if so, we escape the input pattern, so we can run `ls a[123]b`, because it's already escaped.

Finally, to accomplish the checking process, we also need to introduce a new value type called `Value::QuotedString` to differentiate it from `Value::String`. It's used to generate an enum called `NuPath`, which is finally used in the `ls` function. `ls` uses `NuPath` to know whether the user input is quoted.
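A sketch of the escaping step, assuming the common scheme of wrapping each glob metacharacter in a one-character class so it matches literally (`[` becomes `[[]`, `*` becomes `[*]`). The `glob` crate's `Pattern::escape` takes this approach; the hypothetical `escape_glob` below is a standalone illustration, not the code from this PR.

```rust
/// Hypothetical: escape a quoted pattern so every metacharacter is literal.
pub fn escape_glob(pattern: &str) -> String {
    let mut escaped = String::with_capacity(pattern.len());
    for c in pattern.chars() {
        match c {
            '?' | '*' | '[' | ']' => {
                escaped.push('[');
                escaped.push(c);
                escaped.push(']');
            }
            _ => escaped.push(c),
        }
    }
    escaped
}
```

With this, a quoted `"[uwu]"` becomes `[[]uwu[]]`, which only matches a file literally named `[uwu]`.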

# User-Facing Changes

This actually contains several changes:

### For arguments annotated with `:path/:directory/:glob`

#### Before

```nushell
> def foo [p: path] { echo $p }; print (foo "~/a"); print (foo '~/a')
/home/windsoilder/a
/home/windsoilder/a
> def foo [p: directory] { echo $p }; print (foo "~/a"); print (foo '~/a')
/home/windsoilder/a
/home/windsoilder/a
> def foo [p: glob] { echo $p }; print (foo "~/a"); print (foo '~/a')
/home/windsoilder/a
/home/windsoilder/a
```

#### After

```nushell
> def foo [p: path] { echo $p }; print (foo "~/a"); print (foo '~/a')
~/a
~/a
> def foo [p: directory] { echo $p }; print (foo "~/a"); print (foo '~/a')
~/a
~/a
> def foo [p: glob] { echo $p }; print (foo "~/a"); print (foo '~/a')
~/a
~/a
```

### For ls command

`touch '[uwu]'`

#### Before

```
❯ ls -D "[uwu]"
Error:   × No matches found for [uwu]
   ╭─[entry #6:1:1]
 1 │ ls -D "[uwu]"
   ·       ───┬───
   ·          ╰── Pattern, file or folder not found
   ╰────
  help: no matches found
```

#### After

```
❯ ls -D "[uwu]"
╭───┬───────┬──────┬──────┬──────────╮
│ # │ name  │ type │ size │ modified │
├───┼───────┼──────┼──────┼──────────┤
│ 0 │ [uwu] │ file │  0 B │ now      │
╰───┴───────┴──────┴──────┴──────────╯
```

# Tests + Formatting

Done

# After Submitting


# Description

`Value::MatchPattern` implies that `MatchPattern`s are first-class values. This PR removes this case, and commands must now instead use `Expr::MatchPattern` to extract `MatchPattern`s, just like how the `match` command does using `Expr::MatchBlock`.

# User-Facing Changes

Breaking API change for `nu_protocol` crate.

# Description

Reuses the existing `Closure` type in `Value::Closure`. This will help with the span refactoring for `Value`. Additionally, this allows us to more easily box or unbox the `Closure` case should we choose to do so in the future.

# User-Facing Changes

Breaking API change for `nu_protocol`.

# Description

As part of the refactor to split spans off of `Value`, this moves to using helper functions to create values, and using `.span()` instead of matching the span out of `Value` directly.
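A sketch of the pattern, assuming a pared-down `Value` with two variants. The field name `internal_span` and the helper names are illustrative. Call sites that only use `Value::int(..)` and `.span()` no longer depend on where the span is stored, which is what makes moving it later feasible.

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Span {
    pub start: usize,
    pub end: usize,
}

/// Hypothetical, pared-down `Value`.
#[derive(Debug, Clone, PartialEq)]
pub enum Value {
    Int { val: i64, internal_span: Span },
    String { val: String, internal_span: Span },
}

impl Value {
    // Constructors hide each variant's field layout from callers...
    pub fn int(val: i64, span: Span) -> Value {
        Value::Int { val, internal_span: span }
    }

    pub fn string(val: impl Into<String>, span: Span) -> Value {
        Value::String { val: val.into(), internal_span: span }
    }

    // ...and `.span()` replaces matching the span out of every variant.
    pub fn span(&self) -> Span {
        match self {
            Value::Int { internal_span, .. } | Value::String { internal_span, .. } => *internal_span,
        }
    }
}
```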

Hoping to get a few more helping hands to finish this, as there are a lot of commands to update :)

# User-Facing Changes

<!-- List of all changes that impact the user experience here. This helps us keep track of breaking changes. -->

# Tests + Formatting

<!--
Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo fmt --all` applies these changes)
- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used` to check that you're using the standard code style
- `cargo test --workspace` to check that all tests pass (on Windows make sure to [enable developer mode](https://learn.microsoft.com/en-us/windows/apps/get-started/developer-mode-features-and-debugging))
- `cargo run -- -c "use std testing; testing run-tests --path crates/nu-std"` to run the tests for the standard library

> **Note**
> from `nushell` you can also use the `toolkit` as follows
> ```bash
> use toolkit.nu # or use an `env_change` hook to activate it automatically
> toolkit check pr
> ```
-->

# After Submitting

<!-- If your PR had any user-facing changes, update [the documentation](https://github.com/nushell/nushell.github.io) after the PR is merged, if necessary. This will help us keep the docs up to date. -->

---------

Co-authored-by: Darren Schroeder <343840+fdncred@users.noreply.github.com>

Co-authored-by: WindSoilder <windsoilder@outlook.com>

# Description

This doesn't really do much that the user could see, but it helps get us ready to do the steps of the refactor to split the span off of `Value`, so that values can be spanless. This allows us to have top-level values that can hold both a `Value` and a `Span`, without requiring that all values have them.

We expect to see significant memory reduction by removing so many unnecessary spans from values. For example, a table of 100,000 rows and 5 columns would save ~8 MB in spans alone, which are almost always duplicated.
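The estimate can be checked with a little arithmetic, assuming a span is two `usize` offsets (16 bytes on a 64-bit target) and that every cell carries its own span: 100,000 × 5 = 500,000 spans, and 500,000 × 16 bytes = 8,000,000 bytes, or about 7.6 MiB. The `Span` below is a stand-in with that assumed layout.

```rust
/// Stand-in with the assumed layout: two byte offsets.
#[derive(Clone, Copy)]
pub struct Span {
    pub start: usize,
    pub end: usize,
}

/// Bytes spent on spans for a table where every cell stores one span.
pub fn span_bytes(rows: usize, columns: usize) -> usize {
    rows * columns * std::mem::size_of::<Span>()
}
```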

# User-Facing Changes

Nothing yet

# Tests + Formatting

<!--
Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo fmt --all` applies these changes)
- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used -A clippy::needless_collect -A clippy::result_large_err` to check that you're using the standard code style
- `cargo test --workspace` to check that all tests pass
- `cargo run -- -c "use std testing; testing run-tests --path crates/nu-std"` to run the tests for the standard library

> **Note**
> from `nushell` you can also use the `toolkit` as follows
> ```bash
> use toolkit.nu # or use an `env_change` hook to activate it automatically
> toolkit check pr
> ```
-->

# After Submitting

<!-- If your PR had any user-facing changes, update [the documentation](https://github.com/nushell/nushell.github.io) after the PR is merged, if necessary. This will help us keep the docs up to date. -->

# Description

This PR creates a new `Record` type to reduce duplicate code and possibly bugs as well. (This is an edited version of #9648.)

- `Record` implements `FromIterator` and `IntoIterator` and so can be iterated over or collected into. For example, this helps with conversions to and from (hash)maps. (Also, no more `cols.iter().zip(vals)`!)

- `Record` has a `push(col, val)` function to help ensure that the number of columns is equal to the number of values. I caught a few potential bugs thanks to this (e.g. in the `ls` command).

- Finally, this PR also adds a `record!` macro that helps simplify record creation. It is used like so:

```rust
record! {
    "key1" => some_value,
    "key2" => Value::string("text", span),
    "key3" => Value::int(optional_int.unwrap_or(0), span),
    "key4" => Value::bool(config.setting, span),
}
```

Since macros hinder formatting, etc., the right-hand side values should be relatively short and sweet like the examples above.

Where possible, prefer `record!` or `.collect()` on an iterator instead of multiple `Record::push`s, since the first two automatically set the record capacity and do less work overall.
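A self-contained sketch of the ideas above, assuming a simplified `Record` with parallel column and value vectors (`i64` stands in for `Value`). Because the fields are private and only `push` and the iterator conversions add entries, the two vectors cannot drift out of sync. The toy `record!` macro here is a simplified illustration built on `FromIterator`, not the macro from this PR.

```rust
/// Hypothetical simplified `Record`: column and value vectors stay equal in length.
#[derive(Debug, Default, PartialEq)]
pub struct Record {
    cols: Vec<String>,
    vals: Vec<i64>,
}

impl Record {
    pub fn push(&mut self, col: impl Into<String>, val: i64) {
        self.cols.push(col.into());
        self.vals.push(val);
    }

    pub fn get(&self, col: &str) -> Option<&i64> {
        self.cols.iter().position(|c| c == col).map(|i| &self.vals[i])
    }
}

impl<K: Into<String>> FromIterator<(K, i64)> for Record {
    fn from_iter<I: IntoIterator<Item = (K, i64)>>(iter: I) -> Self {
        let iter = iter.into_iter();
        // Reserve capacity up front, which repeated `push` calls can't do.
        let (lower, _) = iter.size_hint();
        let mut record = Record { cols: Vec::with_capacity(lower), vals: Vec::with_capacity(lower) };
        for (col, val) in iter {
            record.push(col, val);
        }
        record
    }
}

impl IntoIterator for Record {
    type Item = (String, i64);
    type IntoIter = std::iter::Zip<std::vec::IntoIter<String>, std::vec::IntoIter<i64>>;

    fn into_iter(self) -> Self::IntoIter {
        self.cols.into_iter().zip(self.vals)
    }
}

/// Toy `record!`-style macro that collects key/value pairs.
macro_rules! record {
    ($($col:expr => $val:expr),* $(,)?) => {
        [$(($col, $val)),*].into_iter().collect::<Record>()
    };
}
```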

# User-Facing Changes

Besides the changes in `nu-protocol`, the only other breaking changes are to `nu-table::{ExpandedTable::build_map, JustTable::kv_table}`.

# Description

This adds `match` and basic pattern matching.

An example:

```nushell
match $x {
    1..10 => { print "Value is between 1 and 10" }
    { foo: $bar } => { print $"Value has a 'foo' field with value ($bar)" }
    [$a, $b] => { print $"Value is a list with two items: ($a) and ($b)" }
    _ => { print "Value is none of the above" }
}
```

Like the recent changes to `if` to allow it to be used as an expression, `match` can also be used as an expression. This allows you to assign the result to a variable, e.g. `let xyz = match ...`

I've also included a shorthand pattern for matching records, as I think it might help when doing a lot of record patterns: `{$foo}`, which is equivalent to `{foo: $foo}`.

There are still missing components, so consider this the first step in full pattern matching support. Currently missing:

* Patterns for strings
* Or-patterns (like the `|` in Rust)
* Patterns for tables (unclear how we want to match a table, so it'll need some design)
* Patterns for binary values
* And much more

# User-Facing Changes

[see above]

# Tests + Formatting

Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo fmt --all` applies these changes)
- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used -A clippy::needless_collect` to check that you're using the standard code style
- `cargo test --workspace` to check that all tests pass

> **Note**
> from `nushell` you can also use the `toolkit` as follows
> ```bash
> use toolkit.nu # or use an `env_change` hook to activate it automatically
> toolkit check pr
> ```

# After Submitting

If your PR had any user-facing changes, update [the documentation](https://github.com/nushell/nushell.github.io) after the PR is merged, if necessary. This will help us keep the docs up to date.

# Description

Fixes #8002: ranges such as `1..3` are now expanded to arrays when saving and converting to JSON. Now,

```
> 1..3 | save foo.json
# foo.json
[
  1,
  2,
  3
]
> 1..3 | to json
[
  1,
  2,
  3
]
```

# User-Facing Changes

_(List of all changes that impact the user experience here. This helps us keep track of breaking changes.)_

# Tests + Formatting

Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- [X] `cargo fmt --all -- --check` to check standard code formatting (`cargo fmt --all` applies these changes)
- [X] `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used -A clippy::needless_collect` to check that you're using the standard code style
- [X] `cargo test --workspace` to check that all tests pass

# After Submitting

If your PR had any user-facing changes, update [the documentation](https://github.com/nushell/nushell.github.io) after the PR is merged, if necessary. This will help us keep the docs up to date.

# Description

_(Thank you for improving Nushell. Please, check our [contributing guide](../CONTRIBUTING.md) and talk to the core team before making major changes.)_

I opened this PR to unify the run command method. It's mainly to improve consistency across the tree.

# User-Facing Changes

None.

# Tests + Formatting

Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo fmt --all` applies these changes)
- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used -A clippy::needless_collect` to check that you're using the standard code style
- `cargo test --workspace` to check that all tests pass

# After Submitting

If your PR had any user-facing changes, update [the documentation](https://github.com/nushell/nushell.github.io) after the PR is merged, if necessary. This will help us keep the docs up to date.

# Description

Lint: `clippy::uninlined_format_args`

More readable in most situations. (May be slightly confusing for modifier format strings: https://doc.rust-lang.org/std/fmt/index.html#formatting-parameters)

Alternative to #7865

# User-Facing Changes

None intended

# Tests + Formatting

(Ran `cargo +stable clippy --fix --workspace -- -A clippy::all -D clippy::uninlined_format_args` to achieve this. Depends on Rust `1.67`.)
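A small before/after illustration of what the lint rewrites. Inline captured identifiers in format strings have been stable since Rust 1.58 and only work for plain identifiers, not arbitrary expressions. The second pair shows the "modifier" case mentioned above, where a width argument is also captured inline.

```rust
/// Positional arguments: the style the lint flags.
pub fn greet_positional(name: &str, width: usize) -> (String, String) {
    (format!("Hello, {}!", name), format!("[{:>1$}]", name, width))
}

/// Inlined arguments: the style the lint prefers. Same output.
pub fn greet_inlined(name: &str, width: usize) -> (String, String) {
    (format!("Hello, {name}!"), format!("[{name:>width$}]"))
}
```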

This is an attempt to implement a new `Value::LazyRecord` variant for performance reasons.

`LazyRecord` is like a regular `Record`, but it's possible to access individual columns without evaluating other columns. I've implemented `LazyRecord` for the special `$nu` variable; accessing `$nu` is relatively slow because of all the information in `scope`, and [`$nu` accounts for about 2/3 of Nu's startup time on Linux](https://github.com/nushell/nushell/issues/6677#issuecomment-1364618122).

### Benchmarks

I ran some benchmarks on my desktop (Linux, 12900K) and the results are very pleasing.

Nu's time to start up and run a command (`cargo build --release; hyperfine 'target/release/nu -c "echo \"Hello, world!\""' --shell=none --warmup 10`) goes from **8.8ms to 3.2ms, about 2.8x faster**.

Tests are also much faster! Running `cargo nextest` (with our very slow `proptest` tests disabled) goes from **7.2s to 4.4s (1.6x faster)**, because most tests involve launching a new instance of Nu.

### Design (updated)

I've added a new `LazyRecord` trait and added a `Value` variant wrapping those trait objects, much like `CustomValue`. `LazyRecord` implementations must implement these 2 functions:

```rust
// All column names
fn column_names(&self) -> Vec<&'static str>;

// Get 1 specific column value
fn get_column_value(&self, column: &str) -> Result<Value, ShellError>;
```
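A sketch of how an implementation stays lazy, assuming the trait shape above with `i64` standing in for `Value` and `String` for `ShellError`. The hypothetical `Counting` record tracks how many columns were evaluated, showing that a single column access does not touch the others, and `collect_record` shows the eager conversion used before handing data to a plugin.

```rust
use std::cell::Cell;

/// Hypothetical sketch of the trait above.
pub trait LazyRecord {
    fn column_names(&self) -> Vec<&'static str>;
    fn get_column_value(&self, column: &str) -> Result<i64, String>;

    /// Eagerly evaluate every column into an ordinary record.
    fn collect_record(&self) -> Result<Vec<(&'static str, i64)>, String> {
        self.column_names()
            .into_iter()
            .map(|col| self.get_column_value(col).map(|v| (col, v)))
            .collect()
    }
}

/// Toy record that counts how many columns were actually evaluated.
#[derive(Default)]
pub struct Counting {
    pub evaluations: Cell<usize>,
}

impl LazyRecord for Counting {
    fn column_names(&self) -> Vec<&'static str> {
        vec!["cheap", "expensive"]
    }

    fn get_column_value(&self, column: &str) -> Result<i64, String> {
        self.evaluations.set(self.evaluations.get() + 1);
        match column {
            "cheap" => Ok(1),
            "expensive" => Ok(42), // imagine a slow computation here
            other => Err(format!("no column named {other}")),
        }
    }
}
```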

### Serializability

`Value` variants must implement `Serialize` and `Deserialize`, which poses some problems because I want to use unserializable things like `EngineState` in `LazyRecord`s. To work around this, I basically lie to the type system:

1. Add `#[typetag::serde(tag = "type")]` to `LazyRecord` to make it serializable

2. Any unserializable fields in `LazyRecord` implementations get marked with `#[serde(skip)]`

3. At the point where a `LazyRecord` normally would get serialized and sent to a plugin, I instead collect it into a regular `Value::Record` (which can be serialized)

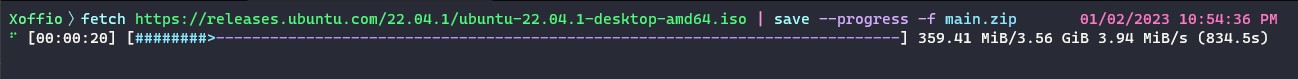

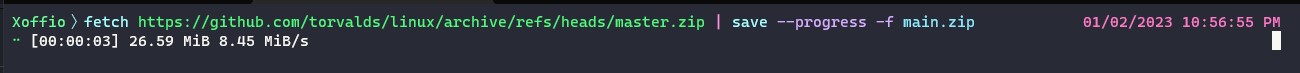

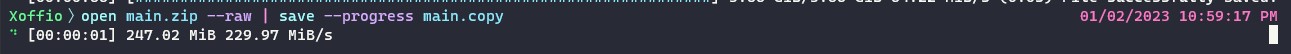

# Description

_(Description of your pull request goes here. **Provide examples and/or screenshots** if your changes affect the user experience.)_

I implemented the status bar we talked about yesterday. The idea was inspired by the progress bar of `wget`.

I decided to go for the second suggestion by `@Reilly`:

> 2. add an `Option<usize>` or whatever to `RawStream` (and `ListStream`?) for situations where you do know the length ahead of time

For now it only works with the `save` command, but once this PR is approved we can see how to implement it for commands like `cp` and `mv`.

When using `fetch`, Nushell will check whether there is a `content-length` header in the response. If so, `fetch` will send it through the new `Option` variable in the `RawStream` to `save`.

If we know the total size, we show the progress bar; if we don't, we just show stats like data already saved, bytes per second, and time elapsed.

Please let me know if I need to make any changes and I will be happy to do it.

# User-Facing Changes

A new flag (`--progress` `-p`) was added to the `save` command

Examples:

```nu
fetch https://github.com/torvalds/linux/archive/refs/heads/master.zip | save --progress -f main.zip
fetch https://releases.ubuntu.com/22.04.1/ubuntu-22.04.1-desktop-amd64.iso | save --progress -f main.zip
open main.zip --raw | save --progress main.copy
```

# Tests + Formatting

Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo fmt --all` applies these changes)
- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used -A clippy::needless_collect` to check that you're using the standard code style
- `cargo test --workspace` to check that all tests pass

I am getting some errors, and it's weird because the errors are showing up in files I haven't touched. Is this normal?

# After Submitting

If your PR had any user-facing changes, update [the documentation](https://github.com/nushell/nushell.github.io) after the PR is merged, if necessary. This will help us keep the docs up to date.

Co-authored-by: Reilly Wood <reilly.wood@icloud.com>

This PR changes `to text` so that when given a `ListStream`, it streams the incoming values instead of collecting them all first.

The easiest way to observe/verify this PR is to convert a list to a very slow `ListStream` with `each`:

```nushell
ls | get name | each {|n| sleep 1sec; $n} | to text
```

The `to text` output will appear 1 item at a time.
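The difference between collecting and streaming can be sketched in a few lines, assuming values arrive as any iterator of displayable items. The hypothetical `stream_to_text` writes and flushes each line as soon as the upstream iterator yields it, so a slow producer shows output one item at a time instead of all at once at the end.

```rust
use std::fmt::Display;
use std::io::{self, Write};

/// Hypothetical streaming `to text`: one line per value, written immediately.
pub fn stream_to_text<I, W>(values: I, out: &mut W) -> io::Result<()>
where
    I: IntoIterator,
    I::Item: Display,
    W: Write,
{
    for value in values {
        writeln!(out, "{value}")?;
        out.flush()?; // make each line visible right away
    }
    Ok(())
}
```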

* Add failing test that list of ints and floats is List<Number>

* Start defining subtype relation

* Make it possible to declare input and output types for commands
  - Enforce them in tests

* Declare input and output types of commands

* Add formatted signatures to `help commands` table

* Revert SyntaxShape::Table -> Type::Table change

* Revert unnecessary derive(Hash) on SyntaxShape
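A sketch of the subtype relation the first commit tests for, assuming a hypothetical `Type` enum where `int` and `float` are both subtypes of `number`, everything is a subtype of `any`, and lists are covariant in their element type. With that, a list literal mixing ints and floats is typed as `list<number>`.

```rust
#[derive(Debug, Clone, PartialEq)]
pub enum Type {
    Int,
    Float,
    Number,
    String,
    Any,
    List(Box<Type>),
}

impl Type {
    pub fn is_subtype_of(&self, other: &Type) -> bool {
        match (self, other) {
            (_, Type::Any) => true,
            (Type::Int | Type::Float, Type::Number) => true,
            (Type::List(a), Type::List(b)) => a.is_subtype_of(b),
            (a, b) => a == b,
        }
    }

    /// A common supertype of two types (not always the most precise one).
    pub fn widen(&self, other: &Type) -> Type {
        if self.is_subtype_of(other) {
            other.clone()
        } else if other.is_subtype_of(self) {
            self.clone()
        } else if self.is_subtype_of(&Type::Number) && other.is_subtype_of(&Type::Number) {
            Type::Number
        } else {
            Type::Any
        }
    }
}

/// Infer `list<T>` for a list literal from its element types.
pub fn list_type(elements: &[Type]) -> Type {
    let elem = elements
        .iter()
        .cloned()
        .reduce(|acc, t| acc.widen(&t))
        .unwrap_or(Type::Any);
    Type::List(Box::new(elem))
}
```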

Co-authored-by: JT <547158+jntrnr@users.noreply.github.com>

* Add search terms for uppercase

* Add search terms

* Add search terms

* Change to parse

* Add search terms for from

* Add search terms for to

* Remove duplicate function

* Remove duplication of search terms

* Remove search term

* Test commands for proper names and search terms

  Assert that the `Command.name()` is equal to `Signature.name`

  Check that search terms are not just substrings of the command name as they would not help finding the command.

* Clean up search terms

  Remove redundant terms that just replicate the command name.

  Try to eliminate substring between search terms, clean up where necessary.
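The substring check from the test commit can be sketched as a small function, assuming a case-insensitive comparison (an assumption; the real test may compare differently). The hypothetical `redundant_search_terms` returns every term that merely repeats part of the command name and so would not help anyone find the command.

```rust
/// Hypothetical check: search terms that are substrings of the command name.
pub fn redundant_search_terms<'a>(command_name: &str, terms: &[&'a str]) -> Vec<&'a str> {
    let name = command_name.to_lowercase();
    terms
        .iter()
        .copied()
        .filter(|term| name.contains(&term.to_lowercase()))
        .collect()
}
```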